Artificial Intelligence (AI) refers to the development and implementation of computer systems capable of performing tasks that typically require human intelligence. It is an interdisciplinary field encompassing various approaches, techniques, and technologies for creating intelligent machines that can perceive and understand their environment, reason, learn, and make informed decisions.

At its core, AI involves the creation of algorithms and models that enable computers to simulate human cognitive processes such as problem-solving, pattern recognition, language understanding, and decision-making. These algorithms are designed to analyze and interpret vast amounts of data, extract meaningful insights, and adapt their behavior based on experience and feedback.

The field of AI encompasses multiple subfields, including machine learning, deep learning, natural language processing, computer vision, robotics, and expert systems. These subfields employ different methodologies and techniques, such as neural networks, statistical modeling, optimization algorithms, and symbolic reasoning, to achieve specific AI objectives. Overall, AI seeks to create intelligent systems that can assist and augment human capabilities, automate repetitive or complex tasks, provide personalized recommendations, optimize decision-making processes, and ultimately enhance many aspects of human life across industries such as healthcare, finance, transportation, and entertainment.

History of AI

The world of Artificial Intelligence (AI) has witnessed a remarkable journey, transforming the way we live and work. Join me on a quick tour through the captivating history of AI, where human ingenuity and technological advancements converge.

The seeds of AI were sown in the 1950s when computer scientists and mathematicians began exploring the concept of “thinking machines.” Inspired by the human brain, these pioneers aimed to develop machines that could mimic cognitive abilities.

Researchers in the 1960s and 70s focused on creating rule-based systems and symbolic AI, paving the way for expert systems. These early AI systems aimed to replicate human expertise in specific domains and solve complex problems using logical rules and knowledge representation.

The 1980s brought a paradigm shift as machine learning moved to the forefront. Instead of explicitly programming rules, scientists developed algorithms that enabled computers to learn from data and improve their performance over time. This led to breakthroughs in pattern recognition, natural language processing, and computer vision.

The 1990s witnessed a surge in AI applications with the rise of the internet and the availability of vast amounts of data. AI-powered search engines, recommendation systems, and spam filters became integral parts of our online experience, revolutionizing how we access and interact with information.

The dawn of the 21st century brought AI to our fingertips, thanks to the proliferation of smartphones and the rise of big data. Companies leveraged AI to enhance user experiences, personalize content, and develop virtual assistants like Siri and Alexa, transforming how we interact with technology.

Fast forward to the present, and AI has become an indispensable part of our daily lives. AI algorithms power social media feeds, autonomous vehicles are being tested and deployed on public roads, and AI-powered healthcare tools support personalized diagnostics and treatments. The possibilities are vast!

Looking ahead, AI continues to evolve at an astonishing pace. Advancements in deep learning, reinforcement learning, and neural network architectures are pushing the boundaries of what AI can do. Ethical considerations and responsible AI development have also taken center stage, with the aim of ensuring that AI benefits humanity while mitigating potential risks.

How does AI work

How an AI system works depends on the specific techniques and methodologies it employs, but here is a general overview of the process:

Data Collection

AI systems require substantial amounts of data to learn and make intelligent decisions. This data can come from various sources, such as sensors, databases, or the internet. The quality and quantity of data play a crucial role in the effectiveness of AI models.

Data Preprocessing

Before feeding the data into AI algorithms, it often needs to be preprocessed to remove noise, handle missing values, normalize the data, or perform other necessary transformations. This step ensures that the data is in a suitable format for analysis.
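To make the preprocessing step concrete, here is a minimal Python sketch of two common transformations: filling missing values with the mean of the observed values, and min-max scaling to the range [0, 1]. The function names `impute_missing` and `min_max_normalize` and the sample ages are hypothetical, chosen only for illustration.

```python
from statistics import mean

def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical raw feature with two missing entries
raw_ages = [25, None, 35, 45, None]
clean = min_max_normalize(impute_missing(raw_ages))
print(clean)  # [0.0, 0.5, 0.5, 1.0, 0.5]
```

Mean imputation is only one option; depending on the data, the median, a constant, or a model-based estimate may be more appropriate.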

Training Phase

The training phase involves using machine learning algorithms to train an AI model. During training, the model is exposed to labeled examples or historical data, allowing it to learn patterns, relationships, and features relevant to the task. This process involves adjusting the model’s parameters or weights to minimize errors and optimize performance.
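The idea of adjusting parameters to minimize error can be sketched with gradient descent on a one-variable linear model. This is a minimal illustration assuming mean squared error as the loss; the function name, learning rate, epoch count, and data are illustrative choices, not taken from the text above.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical labeled examples generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_model(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

Because the hypothetical data follows y = 2x + 1 exactly, the learned parameters should approach w = 2 and b = 1.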

Algorithms and Techniques in Artificial Intelligence

AI systems employ various algorithms and techniques depending on the problem domain. These may include statistical methods, decision trees, support vector machines, neural networks, or advanced deep learning techniques. Each algorithm has strengths and weaknesses and is selected based on the specific task and available data.

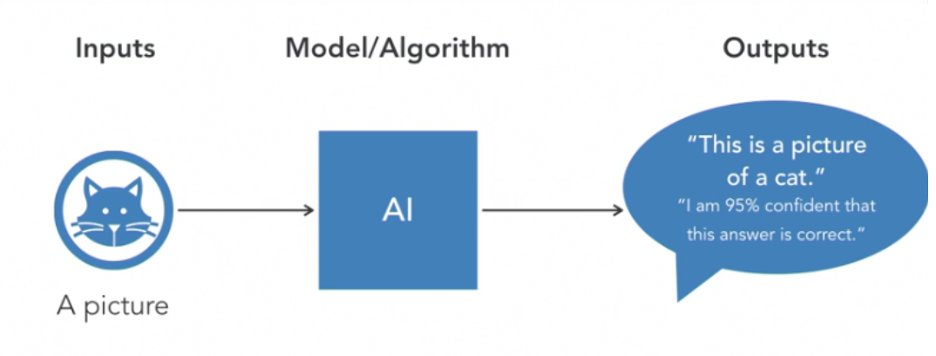

Inference Phase

Once the AI model is trained, it can be used for inference or prediction. During this phase, the model takes input data and generates an output or prediction based on the learned patterns. The model’s decision-making process may involve complex computations, logical reasoning, or probabilistic calculations, depending on the nature of the problem.

Feedback and Iteration

AI systems can improve their performance through feedback and iteration. Errors or inaccuracies can be identified by comparing the model’s predictions with known outcomes or human-labeled data. This feedback is used to refine the model, update its parameters, and improve its accuracy over time.
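The feedback step begins by comparing predictions with known outcomes. The sketch below uses a hypothetical `evaluate` helper and made-up spam-filter labels to compute accuracy and list which examples the model got wrong; those misclassified examples are the signal used to refine the model.

```python
def evaluate(predictions, labels):
    """Compare predictions with known outcomes; return accuracy and misclassified indices."""
    wrong = [i for i, (p, y) in enumerate(zip(predictions, labels)) if p != y]
    accuracy = 1 - len(wrong) / len(labels)
    return accuracy, wrong

# Hypothetical model outputs versus human-labeled ground truth
preds = ["spam", "ham", "spam", "ham"]
labels = ["spam", "spam", "spam", "ham"]
acc, wrong = evaluate(preds, labels)
print(acc, wrong)  # 0.75 [1]
```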

Deployment and Integration

Once an AI model has been trained and validated, it can be deployed and integrated into various applications or systems. This can involve creating APIs or interfaces that allow other software to interact with the AI system, enabling real-time decision-making, automation, or intelligent recommendations.

It’s important to note that AI is a vast and evolving field, and the specific workings of AI systems can vary depending on the techniques, algorithms, and technologies employed. Different AI approaches, like symbolic reasoning or evolutionary algorithms, may have distinct processes and mechanisms. However, the general framework described above provides a high-level understanding of how AI systems are developed and function.

Key Components of Artificial Intelligence

AI, or Artificial Intelligence, is a complex field encompassing various components and technologies. Here are its key components:

Machine Learning

Machine learning is a subset of AI that focuses on enabling computers to learn from data without explicit programming. It involves the development of algorithms that can automatically analyze and extract patterns, relationships, and insights from large datasets. Machine learning algorithms can be classified into different types, including supervised learning (where models learn from labeled data), unsupervised learning (where models discover patterns in unlabeled data), and reinforcement learning (where models learn through interactions with an environment).

Neural Networks

Neural networks are a crucial component of AI, loosely inspired by the structure and functioning of the human brain. These networks consist of interconnected nodes called artificial neurons; the perceptron is the simplest example of such a unit. Neural networks excel at pattern recognition, image and speech processing, and natural language understanding. Deep learning, a subset of machine learning, involves building and training neural networks with multiple layers, enabling them to learn hierarchical representations of data.
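A single artificial neuron can be sketched as a perceptron: it computes a weighted sum of its inputs, applies a step function, and adjusts its weights whenever it misclassifies an example. The minimal Python sketch below, with hypothetical function names, learns the logical AND function. A learning rate of 1.0 keeps every value an exact integer, and the perceptron rule is only guaranteed to converge on linearly separable data such as this.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0

def perceptron_predict(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Classic perceptron learning rule for one artificial neuron."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - perceptron_predict(weights, bias, x)
            # Weights change only when the neuron's output is wrong (error != 0)
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias

# Logical AND: linearly separable, so the perceptron can learn it
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([perceptron_predict(weights, bias, x) for x in samples])  # [0, 0, 0, 1]
```

Deep networks stack many such units in layers and replace the step function with differentiable activations so they can be trained by gradient descent.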

Natural Language Processing

Natural Language Processing (NLP) enables machines to understand and process human language. It involves techniques and algorithms that allow computers to interpret, analyze, and generate human language, facilitating tasks such as language translation, sentiment analysis, chatbots, and speech recognition. NLP incorporates various components, including syntactic and semantic analysis, part-of-speech tagging, named entity recognition, and language generation.

Computer Vision

Computer vision focuses on allowing machines to understand and interpret visual information from images or videos. It involves techniques such as image recognition, object detection, image segmentation, and image generation. Computer vision has applications in areas such as autonomous vehicles, medical imaging, facial recognition, and surveillance systems.

Robotics

Robotics is the field of AI that deals with designing and developing intelligent machines capable of interacting with the physical world. AI-powered robots can perceive their environment through sensors, make decisions, and manipulate objects. Robotics combines AI techniques with mechanical engineering and control systems to create autonomous and adaptive robots that perform tasks in various domains, including manufacturing, healthcare, exploration, and assistive technologies.

Knowledge Representation and Reasoning

Knowledge representation refers to structuring and organizing information so that machines can understand it and reason with it. It involves representing knowledge using symbolic languages, ontologies, or graph-based models. Reasoning techniques enable AI systems to draw conclusions, make inferences, and apply logical rules to solve problems. Knowledge representation and reasoning are crucial in expert systems, decision support systems, and intelligent agents.
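Forward chaining is one classic reasoning technique for rule-based knowledge: it repeatedly applies if-then rules to the known facts until nothing new can be inferred. The sketch below is a minimal illustration; the function name, facts, and rules are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new facts can be inferred.

    Each rule is (premises, conclusion): if every premise is known, the conclusion is added.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical knowledge base
rules = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "can_fly"}, "can_migrate"),
]
inferred = forward_chain({"has_feathers", "can_fly"}, rules)
print(sorted(inferred))  # ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
```

Note that `can_migrate` is derived in two steps: the first rule produces `is_bird`, which then satisfies the second rule.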

Data and Big Data Analytics

Data is fundamental to AI systems. AI relies on vast amounts of data to train models, validate predictions, and make informed decisions. Big data analytics involves processing and analyzing massive datasets using AI techniques, such as machine learning and data mining, to extract valuable insights, identify patterns, and support decision-making processes.

These components are interconnected and often work together in AI systems, each playing a crucial role in different aspects of AI development and applications. By leveraging these key components, AI aims to create intelligent systems that can perceive, understand, learn, reason, and interact with the world in ways that resemble human intelligence.

Best Algorithm in Artificial Intelligence

Selecting the best algorithm in AI depends on several factors, including the specific task or problem, available data, computational resources, and performance requirements. It’s important to note that no single “best” algorithm universally outperforms all others in every scenario. Instead, different algorithms excel in different areas. Here are some popular and widely used algorithms in AI:

Support Vector Machines (SVM)

SVM is a robust supervised learning algorithm used for classification and regression tasks. It works by finding an optimal hyperplane that separates data points into different classes while maximizing the margin between them. SVMs are known for their effectiveness with high-dimensional data and perform well when there is a clear margin of separation.
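To illustrate the margin idea, the following sketch trains a linear SVM by subgradient descent on the regularized hinge loss. It is a simplified teaching version, assuming labels of +1/-1 and linearly separable toy data; the function names, hyperparameters, and points are hypothetical, and practical SVM libraries use dedicated solvers and also support kernels.

```python
def train_linear_svm(points, labels, lam=0.01, learning_rate=0.1, epochs=1000):
    """Linear soft-margin SVM via subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) with labels in {+1, -1}.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    n = len(points)
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularizer
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge loss is active
                grad_w = [g - y * xi / n for g, xi in zip(grad_w, x)]
                grad_b -= y / n
        w = [wi - learning_rate * g for wi, g in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return w, b

def svm_predict(w, b, x):
    """Classify by which side of the hyperplane w.x + b = 0 the point falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

points = [(1.0, 1.0), (2.0, 1.5), (1.5, 2.0), (-1.0, -1.0), (-2.0, -1.5), (-1.5, -2.0)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print([svm_predict(w, b, p) for p in points])
```

Only points with a margin below 1 contribute to the update, which is why the final hyperplane is determined by the closest points (the support vectors).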

Random Forest

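Random Forest is an ensemble learning algorithm that combines multiple decision trees to make predictions. Each tree is trained on a random bootstrap sample of the data and considers only a random subset of features at each split; the forest then aggregates the trees' outputs, by majority vote for classification or by averaging for regression, to produce the final prediction. Random Forests are robust, handle high-dimensional data, and are less prone to overfitting than individual decision trees, making them suitable for a wide range of classification and regression tasks.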
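The bootstrap-and-vote idea behind Random Forest can be sketched in plain Python. To stay short, this simplified version uses depth-1 trees (decision stumps) and picks one random feature per tree, whereas a real Random Forest grows deeper trees and samples features at every split. All names and data here are hypothetical.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature):
    """Depth-1 tree on one feature: choose the midpoint threshold with the fewest errors."""
    values = sorted({r[feature] for r in rows})
    majority = Counter(labels).most_common(1)[0][0]
    # Fallback "no split": predict the majority label on both sides
    best = (sum(y != majority for y in labels), values[0], majority, majority)
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
        right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return feature, threshold, left_label, right_label

def stump_predict(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(rows) examples with replacement
        idx = [rng.randrange(len(rows)) for _ in rows]
        # Simplification: one random feature per tree instead of per split
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def forest_predict(forest, row):
    # Majority vote over all trees
    votes = Counter(stump_predict(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

rows = [(1, 1), (2, 1), (1, 2), (2, 2), (8, 8), (9, 8), (8, 9), (9, 9)]
labels = ["A", "A", "A", "A", "B", "B", "B", "B"]
forest = fit_forest(rows, labels)
print([forest_predict(forest, r) for r in rows])
```

For regression, `forest_predict` would average the trees' numeric outputs instead of taking a vote.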

Gradient Boosting Machines (GBM)

GBM is an ensemble learning technique that builds multiple weak models (typically shallow decision trees) sequentially, with each new model fitted to correct the errors of the ensemble built so far. By combining these models, GBM produces a strong predictive model. XGBoost and LightGBM are popular implementations of gradient boosting known for their scalability, speed, and high performance in competitions and real-world applications.
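Gradient boosting's sequential error correction is easiest to see with squared loss, where each new weak model is simply fitted to the current residuals. This minimal sketch assumes one-dimensional inputs and uses regression stumps as the weak learners with a shrinkage (learning rate) of 0.3. It is a teaching example with hypothetical names and data, not a description of how XGBoost or LightGBM are implemented.

```python
def fit_regression_stump(xs, targets):
    """Depth-1 regression tree: split at the midpoint that minimizes squared error."""
    pts = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pts)):
        if pts[i - 1][0] == pts[i][0]:
            continue  # no threshold separates equal x values
        threshold = (pts[i - 1][0] + pts[i][0]) / 2
        left = [t for _, t in pts[:i]]
        right = [t for _, t in pts[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def gradient_boost(xs, ys, n_rounds=100, learning_rate=0.3):
    base = sum(ys) / len(ys)  # start from a constant prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared loss, the negative gradient is just the residual y - prediction
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return predict

xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 3.0, 3.0, 6.0, 6.0]
model = gradient_boost(xs, ys)
mse = sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE: {mse:.6f}")
```

No single stump can fit this three-level target, but the sequence of small corrections drives the training error toward zero.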

Convolutional Neural Networks (CNN)

CNNs are deep learning models designed primarily for image and visual data analysis. They excel at image recognition, object detection, and image segmentation. CNNs use convolutional layers to extract relevant features from input data and are known for their ability to capture spatial relationships within images.
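The core operation of a convolutional layer slides a small kernel over the image and takes a weighted sum at each position. The sketch below computes a "valid" (no padding) 2-D convolution in plain Python; like common deep learning frameworks, it does not flip the kernel, so it is technically cross-correlation. The edge-detecting kernel and the toy image are hypothetical; in a trained CNN, kernel weights are learned rather than written by hand.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation) of a 2-D list with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            row.append(sum(image[i + a][j + c] * kernel[a][c]
                           for a in range(kh) for c in range(kw)))
        output.append(row)
    return output

# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hypothetical vertical-edge detector: responds to left-to-right intensity increases
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The strong response appears only in the column where the edge sits, which is how a convolutional layer captures local spatial structure.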

Recurrent Neural Networks (RNN)

RNNs are a class of neural networks that are particularly effective at processing sequential data, such as time series or natural language. RNNs have a feedback loop that allows them to consider the context of previous inputs when making predictions. Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) are popular RNN architectures that mitigate the vanishing gradient problem and can model long-term dependencies.
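The feedback loop of an RNN amounts to feeding the previous hidden state back in at every step. This minimal sketch uses a scalar Elman-style RNN with hand-picked, hypothetical weights to show that the same final input yields a different state depending on what came earlier.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    states = []
    for x in inputs:
        # The previous hidden state feeds back in, carrying context forward
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Same last two inputs, different history -> different final state
with_history = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
no_history = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(with_history)
print(no_history)  # [0.0, 0.0, 0.0]
```

Because the context shrinks by repeated multiplication through `w_h` and `tanh`, early inputs fade over long sequences; LSTM and GRU cells add gates to counter this.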

K-means Clustering

K-means is an unsupervised learning algorithm used for clustering. It partitions data points into k clusters by repeatedly assigning each point to its nearest centroid and then moving each centroid to the mean of its assigned points, until the assignments stop changing. K-means is widely used for customer segmentation, image compression, and anomaly detection.
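The assign-then-update loop of k-means is known as Lloyd's algorithm. This minimal sketch takes the initial centroids as an argument to keep the example deterministic (real implementations usually initialize them randomly or with k-means++); the function name and points are hypothetical.

```python
def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps until stable."""
    centroids = [tuple(c) for c in centroids]
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance)
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [sum((pi - ci) ** 2 for pi, ci in zip(p, c)) for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: move each centroid to its cluster mean (keep it if the cluster is empty)
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:
            break  # assignments no longer change the centroids
        centroids = new_centroids
    return centroids, clusters

points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, clusters = kmeans(points, centroids=[(0, 0), (10, 10)])
print(centroids)
print(clusters)  # [[(1, 1), (1, 2), (2, 1)], [(8, 8), (8, 9), (9, 8)]]
```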

Reinforcement Learning (RL)

RL is a learning paradigm in which agents learn by interacting with an environment, choosing actions that maximize cumulative reward over time. Q-learning and Deep Q-Networks (DQN) are popular RL algorithms. RL is well suited to applications involving sequential decision-making, game playing, robotics, and autonomous systems.
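Tabular Q-learning can be sketched on a tiny hypothetical environment: a corridor of five states in which the agent earns a reward of 1 for reaching the right end. The agent keeps a table of action values and nudges each entry toward the observed reward plus the discounted best value of the next state. The environment, hyperparameters, and function names are illustrative assumptions; DQN replaces the table with a neural network.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def env_step(state, action):
    """Hypothetical 1-D corridor: reward 1 for reaching the goal, 0 otherwise."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: explore sometimes (and on ties), otherwise take the best-known action
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env_step(state, action)
            # Q-learning update toward reward + discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q = q_learning()
policy = [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: always move right
```

The discount factor `gamma` makes the agent prefer shorter paths: the learned value of moving right shrinks by a factor of 0.9 for each step further from the goal.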

Please pay attention

Note that this list is not exhaustive; numerous other algorithms and variations exist. Selecting the best algorithm requires carefully considering the problem requirements, available data, scalability, interpretability, and computational constraints. Algorithm performance is also influenced by hyperparameter tuning, data preprocessing, feature engineering, and the overall system architecture.

AI Trends in 2023

Continued Advancements in Deep Learning

Deep learning, a subset of machine learning focused on training deep neural networks, has been a significant driver of recent AI progress. This trend is likely to continue in 2023, with advances in model architectures, optimization techniques, and training methods. These advances could improve performance on computer vision, natural language processing, and speech recognition tasks.

Ethical and Responsible AI

As AI becomes increasingly integrated into various aspects of society, there is growing concern about its ethical implications and growing demand for responsible, explainable AI. Explainability refers to the ability of AI systems to provide understandable explanations for their decisions and actions. In critical domains like healthcare, finance, and law, explainable AI is crucial for building trust, ensuring transparency, and meeting regulatory requirements. Research and development in explainable AI techniques is likely to gain momentum in 2023.

Edge Computing and AI

Edge computing involves performing data processing and AI computations closer to the data source, reducing the need for cloud connectivity and enabling real-time, low-latency AI applications. With the proliferation of Internet of Things (IoT) devices and the need for AI inference at the edge, the integration of edge computing and AI will likely be a prominent trend in 2023.

AI in Healthcare

AI has shown significant potential in revolutionizing healthcare, from early disease detection and diagnosis to personalized medicine and drug discovery. In 2023, we can expect increased adoption of AI technologies in healthcare settings, including using AI for medical imaging analysis, electronic health record analysis, virtual assistants, and telemedicine.

AI in Natural Language Processing

Natural Language Processing (NLP) has made significant strides in recent years, with advancements in machine translation, sentiment analysis, and language generation. In 2023, we can expect further progress in NLP, including more sophisticated language models, improved contextual understanding, and enhanced dialogue systems.

AI in Automation and Robotics

AI is playing a crucial role in automation and robotics, transforming industries such as manufacturing, logistics, and transportation. In 2023, we can anticipate increased integration of AI technologies in these areas, including AI-powered autonomous vehicles, robotic process automation, and intelligent warehouse management systems.

The goals of Artificial Intelligence

The goals of Artificial Intelligence (AI) encompass a wide range of objectives that seek to replicate, augment, or surpass human intelligence in various domains. While the ultimate goal of achieving human-level or general intelligence remains a long-term aspiration, AI is pursued for several specific purposes, which include:

Automation and Efficiency

AI aims to automate tasks that typically require human intelligence, thereby increasing efficiency, productivity, and cost-effectiveness. By leveraging AI techniques such as machine learning and robotics, organizations can streamline processes, reduce manual labor, and achieve higher levels of automation.

Decision Support and Optimization

AI can assist decision-making processes by analyzing complex data, identifying patterns, and providing valuable insights. AI models can optimize resource allocation, scheduling, logistics, and other operational aspects, enabling more informed and data-driven decision-making.

Natural Language Processing and Communication

AI endeavors to develop systems that can understand, interpret, and generate human language naturally. This goal includes language translation, speech recognition, sentiment analysis, and intelligent dialogue systems. The aim is to bridge the gap between humans and machines, facilitating seamless communication and interaction.

Personalization and Recommendation

AI seeks to create personalized experiences and recommendations tailored to individual preferences and needs. By analyzing user behavior, historical data, and contextual information, AI algorithms can provide customized product recommendations, content suggestions, and targeted advertisements, enhancing user satisfaction and engagement.

Understanding and Modeling Human Intelligence

A fundamental goal of AI is to understand and replicate human cognitive processes. By studying and modeling human intelligence, AI researchers aim to create systems that mimic human perception, reasoning, and problem-solving. This pursuit draws on cognitive architectures, cognitive computing, and cognitive neuroscience.

Creativity and Innovation

AI aims to explore creativity and innovation by developing systems that generate novel ideas, art, music, and designs. Computational creativity is an interdisciplinary field that aims to advance AI systems’ ability to exhibit creative thinking and produce original outputs.

Autonomous Systems and Robotics

AI endeavors to develop autonomous systems that can operate and adapt independently in complex real-world environments. Autonomous vehicles, robots, and drones are areas where AI is applied to achieve tasks such as navigation, object recognition, and decision-making to enhance safety, efficiency, and productivity.

Scientific Discovery and Healthcare

AI can contribute to scientific research and healthcare by analyzing vast amounts of data, identifying patterns, and assisting in complex problem-solving. AI algorithms can aid in drug discovery, genomics, medical imaging analysis, disease diagnosis, and personalized medicine to advance scientific knowledge and improve healthcare outcomes.

The last word

These goals reflect the multifaceted nature of AI and the diverse domains where it is applied. While progress towards these goals is ongoing, it is essential to navigate ethical considerations, ensure responsible development and use of AI, and address potential societal impacts.